NVIDIA’s Jetson series of modules has always brought an exciting amount of processing power to mobile and edge AI applications—this being their intended use case. The Jetson lineup also includes several developer kits: modules on reference carrier boards in a format quite similar to single board computers. For the sake of simplicity, we’ll even call these boards “SBCs” for the rest of this review. Let’s not dwell on the semantics for too long—if it looks like a duck and quacks like a duck, it probably is a duck.

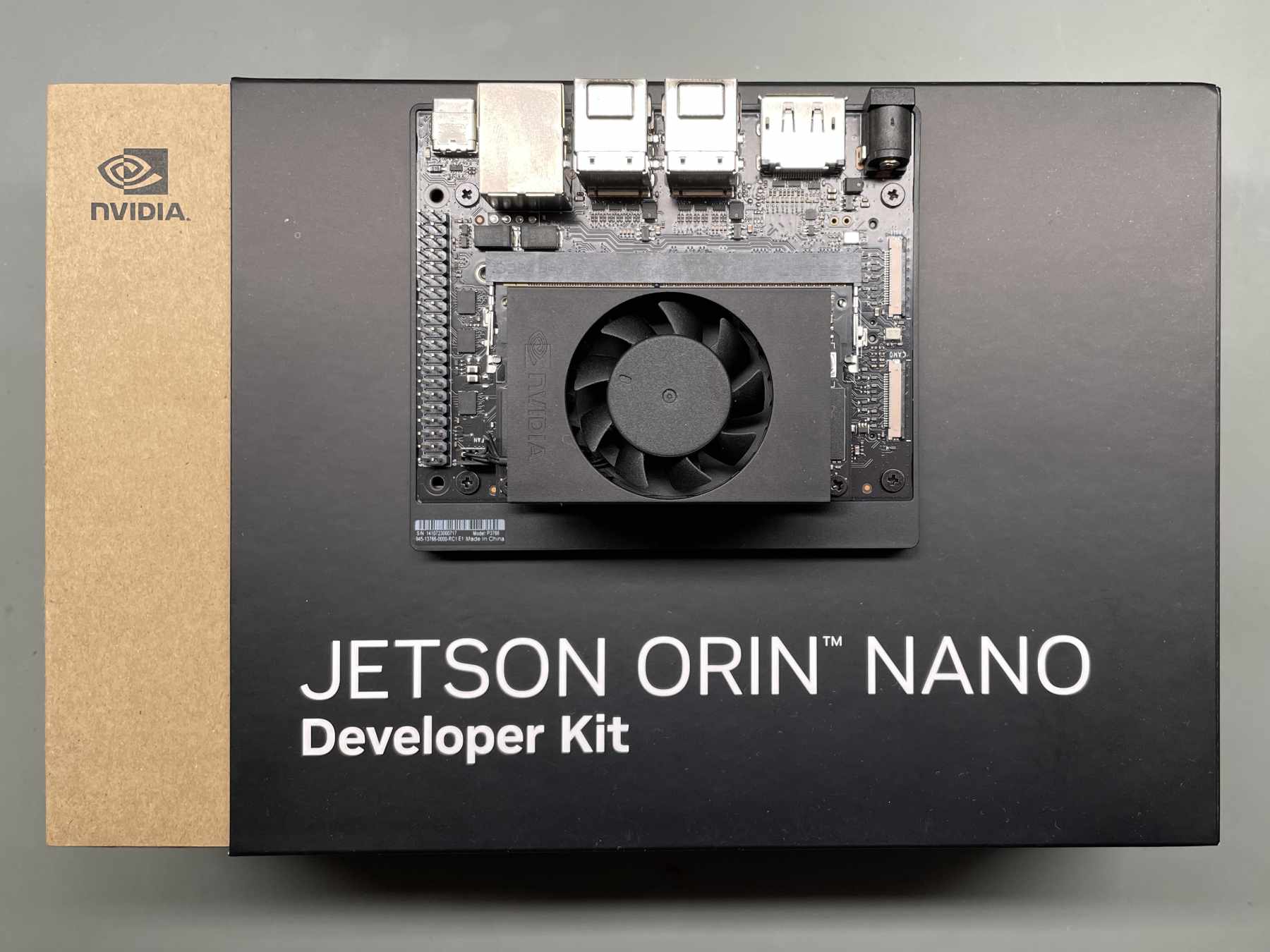

The SBC we’re taking a look at today is NVIDIA’s new Jetson Orin Nano Developer Kit, which was announced this March at NVIDIA’s GTC 2023 event. The module it’s based on has been around slightly longer but has only just now made it into the SBC format. Designed for rapid prototyping, it brings a powerful set of AI hardware and software in a standalone form factor.

The kit sitting on top of its nifty box

While some Jetson kits retail for up to $1999, like the mighty AGX Orin Developer Kit, the new Orin Nano kit retails for a much more affordable $499, making it accessible to developers and more serious makers. The previous generation Jetson Nano Developer Kit (we’ll compare the two in a later section) started at $149, making it extremely popular within the education and maker communities (there was also a trimmed-down version of the older Nano selling for just $59, now sadly discontinued).

This does make the new Jetson Orin Nano quite a bit more expensive than its predecessor, but according to NVIDIA’s latest Jetson family chart, it’s not really meant to replace the $149 Nano but instead to bridge the gap between it and the AGX Orin. This makes a lot of sense; the performance difference between the two Nanos is much larger than what would be expected from a generational upgrade, if this were one.

So, somewhat confusingly, there are now two Jetson Nano developer kits on the market, filling different niches. This has entertainingly already caused some confusion on the Jetson Forums, but it’s not too bad of a naming scheme either.

Before moving on, we’d like to thank NVIDIA for providing us with a Jetson Orin Nano board free of charge for review purposes. NVIDIA also provided us with pre-release software for the Orin Nano. This software still had a few quirks, which were all fixed in JetPack 5.1.1, the first production software release.

An overhead shot of the system

Specs

| Jetson Orin Nano module specifications | |
| --- | --- |
| CPU | 6-core Arm Cortex-A78AE v8.2 64-bit CPU at 1.5 GHz |
| GPU | NVIDIA Ampere architecture with 1024 CUDA cores and 32 Tensor cores |
| RAM | 8 GB 128-bit LPDDR5 |
| Storage | External microSD or NVMe storage |
| Power consumption | Configurable 7 W to 15 W |

Let’s take a look at the Orin Nano Module first. It features a 6-core Arm Cortex-A78AE CPU and a serious Ampere-based GPU setup with 1024 CUDA cores and 32 third-generation Tensor cores. This makes it computationally the most powerful SoC we’ve seen on an embedded system so far, offering unparalleled performance for the price. Some keen-eyed readers might have noticed an “AE” suffix up there. This denotes a special core architecture for mission-critical tasks, offering unique execution modes capable of achieving SIL 3 safety for use in essential industrial applications. Interestingly enough, the Tegra Orin series of chips is reportedly the first lineup of SoCs to license this core – the Orin Nano truly is at the forefront of tech here.

There’s 8 GB of LPDDR5 RAM on board, shared between the CPU and GPU, offering a memory bandwidth of 68 GB/s. This isn’t as fast as the GDDR5 or HBM2 RAM often used in discrete GPUs, but it also shouldn’t present any bottlenecking issues.
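As a rough sanity check on that bandwidth figure, the sketch below works backwards from the 128-bit bus width to the per-pin transfer rate it implies. Only the 68 GB/s and 128-bit values come from the specs above; the function name is ours, and the result is an inferred number rather than one published by NVIDIA.

```python
def implied_transfer_rate_mtps(bandwidth_gb_s: float, bus_width_bits: int) -> float:
    """Return the transfer rate (in MT/s) implied by a peak bandwidth and bus width."""
    bytes_per_transfer = bus_width_bits / 8  # a 128-bit bus moves 16 bytes per transfer
    transfers_per_second = bandwidth_gb_s * 1e9 / bytes_per_transfer
    return transfers_per_second / 1e6


rate = implied_transfer_rate_mtps(68, 128)
print(f"Implied transfer rate: {rate:.0f} MT/s")  # 4250 MT/s
```

In other words, the quoted bandwidth is consistent with memory running at a little over 4 GT/s across the full 128-bit bus.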

Finally, like on all Jetson Nano development modules, an SD card slot is present. It is curiously not located on the carrier board but right on the module, presumably to better emulate production models’ embedded flash.

| Carrier board specs | |
| --- | --- |
| Camera connectors | 2 x MIPI CSI-2 22-pin |
| M.2 slots | 2 x M.2 Key M, 1 x M.2 Key E |
| USB | 4 x USB 3.2 Gen2 Type A, 1 x USB C for debug and flashing |
| Networking | 1 x Gigabit Ethernet connector |
| Video output | 1 x DisplayPort 1.2 (+MST) |
| Other | 40-pin Pi-compatible GPIO header, 12-pin button header, 4-pin fan header, DC power jack |
| Dimensions | 100 mm x 79 mm x 21 mm |

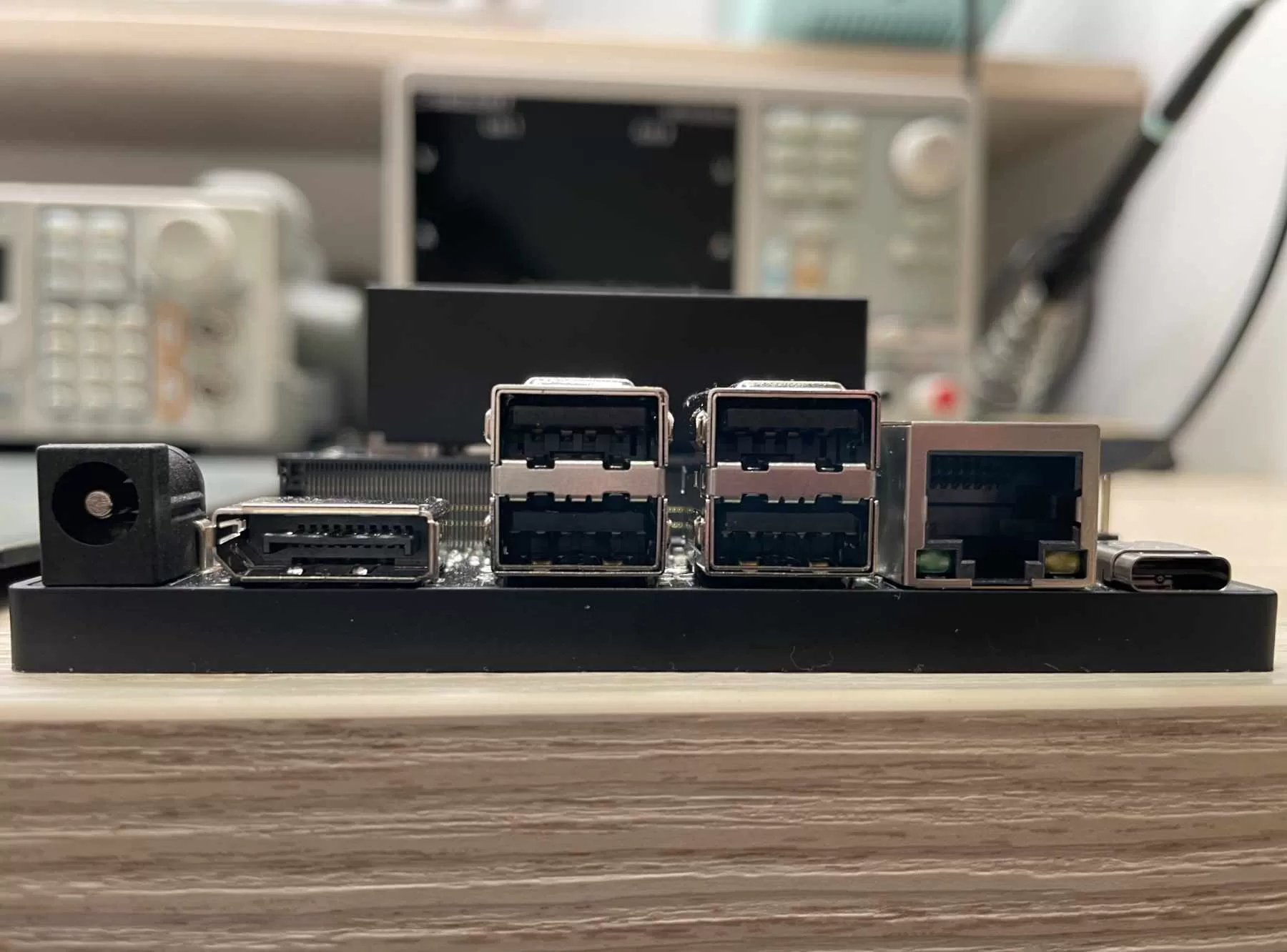

The carrier board is also full of features, exposing an exceptionally rich IO set. Two MIPI CSI-2 camera connectors run along the right of the board (okay, well, right when oriented with the serial number sticker facing the user, which in turn renders the silkscreen text upside-down; this is the way the system is drawn on the box graphics too, so we really don’t know the “official” stance here). Four USB 3.2 Gen2 ports are situated along the top, as are a 1 Gbps Ethernet port, one DisplayPort 1.2 port (with multi-stream transport, meaning multiple displays are supported from one port), one 19 V DC power barrel jack, and one USB-C connector for flashing and debugging.

The kit’s top side contains most of the everyday ports

USB 3.2 Gen2 ports each offer up to a 10 Gbps transfer rate (let’s not get into the whole USB naming scheme rant again), which adds up to a very respectable amount of USB throughput on an SBC. The Ethernet port could have maybe been a 2.5 Gbps one, but we can’t complain too much about a gigabit port. Curiously, there’s no HDMI out like on the original Jetson Nano, which might warrant a quick run to the store. DP-to-HDMI adapters are reasonably cheap and simple to find, so it shouldn’t be a huge hassle overall.

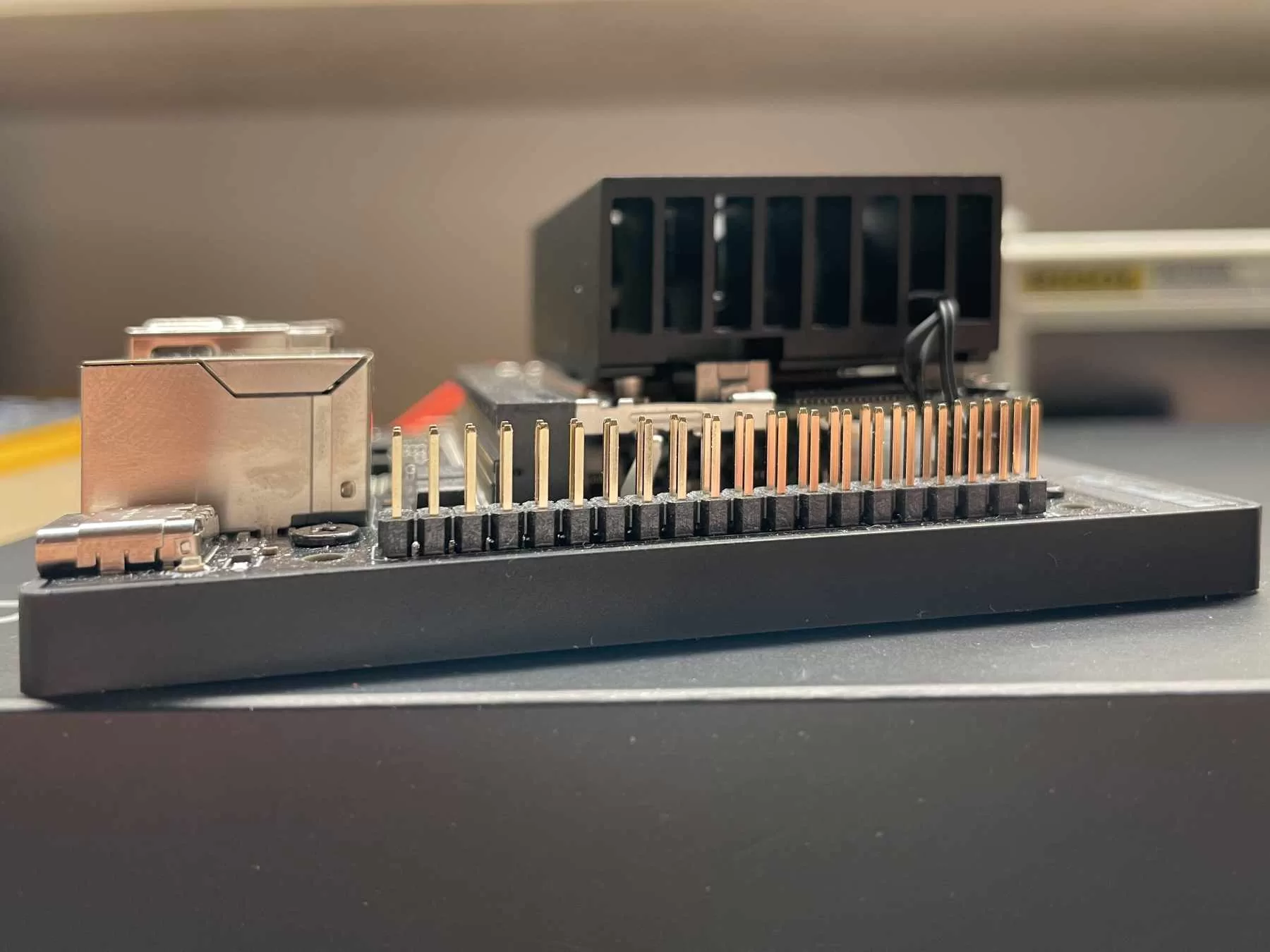

The left side of the board features a Pi-compatible GPIO comb. Although pin-to-pin compatible with the Raspberry Pi, HATs for the latter won’t fit onto the Nano for rather obvious physical reasons: the SoC heatsink is in the way.

The GPIO header looks just like the Raspberry Pi one…

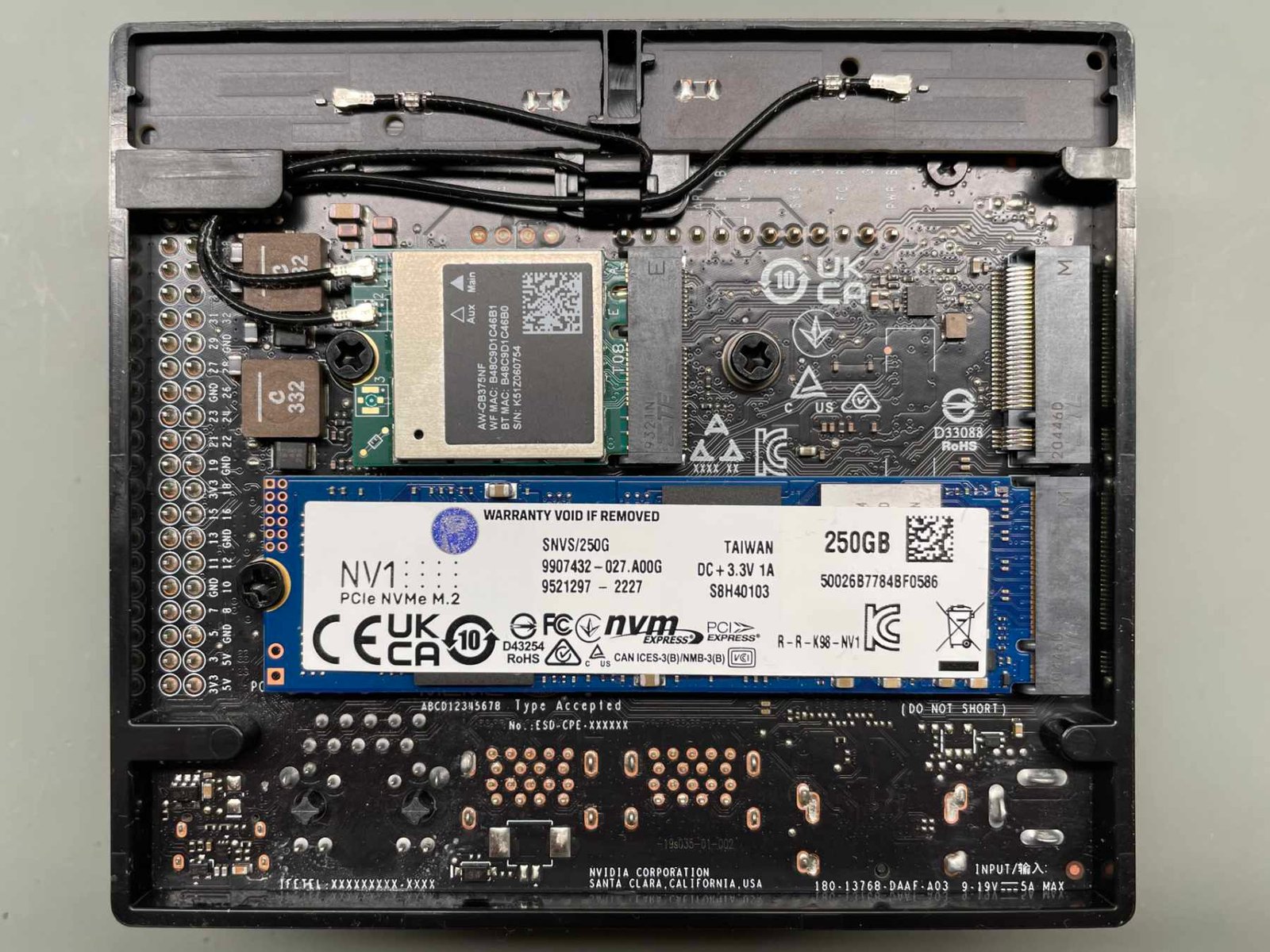

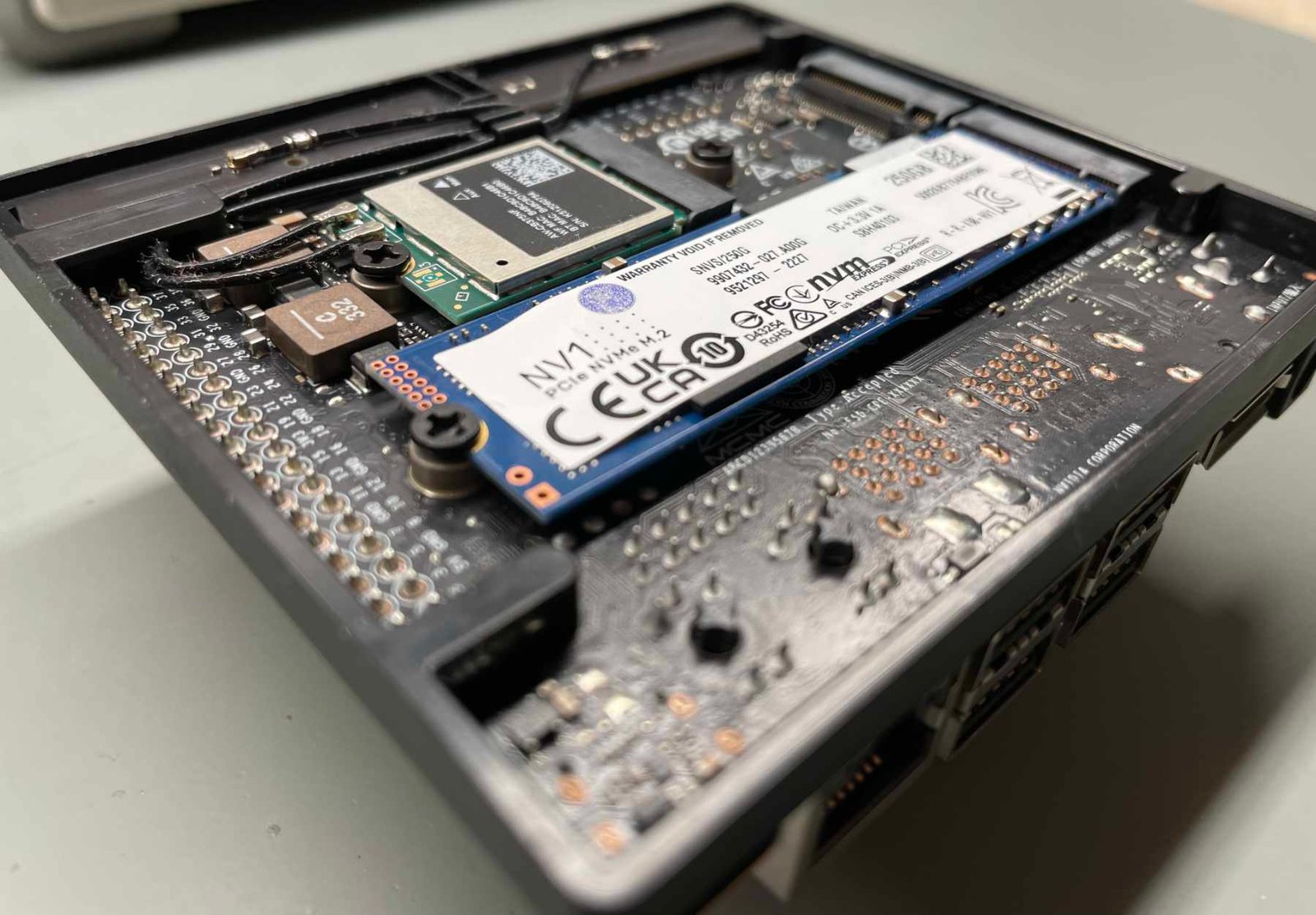

Flipping the board over reveals three more important connectors: two M.2 Key M slots (one of them is a PCIe x4 slot and the other’s a PCIe x2) and one M.2 Key E slot. The Key E slot is pre-populated with a wireless connectivity card, and the Key M slots both accept NVMe SSDs, which is brilliant (keeping in mind that only the PCIe x4-enabled M.2 slot accepts full-size drives due to space constraints).

The system’s bottom side exposes a niftily managed set of antennas

There are also two PCB antennas that are neatly cable managed and tucked away—more on this in a second.

It’s also worth mentioning the Jetson 12-pin button header: an extra set of pins for connecting power and reset buttons, and the on-board fan connector, which supplies the built-in fan with power.

Finally, the carrier board also supports Jetson Orin NX modules, which is a nice bonus for users who want to upgrade to a faster system down the road or who work with multiple Orin models simultaneously.

Hardware look and feel

The Jetson Orin Nano is hands-down one of the most polished SBCs we’ve ever tested. We repeat time after time that looks really do come last when tech like this is in question, but we have to say we’re impressed. There’s a small plastic “frame” in which the board itself sits. This elevates the board off the ground and shields any expansion cards placed beneath from dragging across the surface. This frame, as mentioned a second ago, holds two PCB antennas, and we are pretty sure these were the original motivation behind including it. NVIDIA decided to use a stock wireless solution instead of embedding one into the carrier board, which is more than adequate, especially when clever packaging manages to avoid antenna cable messes (which more often than not plague SBCs that forego this route).

The heatsink is quite chunky, but the fan is impressively quiet

The stock thermal management solution is also great. The metal heatsink does its job perfectly, and the fan is extremely quiet (seriously, it’s impressive—you can barely hear it spin when placing your ear right next to it).

All of this gives us the confidence to recommend keeping the Orin Nano out of any potential third-party cases (and this is something we generally recommend since we dislike keeping exposed SBCs sitting right on top of desks without some standoffs, at least). The build quality here is above and beyond what we normally expect; the new Jetson is ready to be placed right on top of your workbench.

Jetson Orin Nano AI performance

According to NVIDIA, the Jetson Orin Nano can deliver up to 40 TOPS of INT8 performance, compared to 0.472 TFLOPS of FP16 performance for the older Jetson Nano. NVIDIA claims an 80x performance increase, which feels about right. Keep in mind that we’re comparing FP16 performance on the older Nano to INT8 performance on the newer one, as the previous generation Nano didn’t have hardware INT8 support (due to the lack of Tensor cores), so these two numbers might not be directly comparable in all situations.

There’s also a claimed 5.4x increase in CUDA performance (from 0.236 to 1.28 TFLOPS) and a 6.6x CPU performance increase compared to the original Nano. This is expected, especially when comparing the specs: the older Nano has only four A57 CPU cores, half the RAM, and only 128 CUDA cores.
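For readers who like to check the maths, here is a minimal sketch that recomputes the headline ratios from the figures quoted above. The constant and function names are ours, and the AI ratio still mixes INT8 on the new board with FP16 on the old one, so treat it as a ballpark rather than a like-for-like comparison.

```python
# Peak figures quoted in this review (NVIDIA's claims); names are illustrative.
ORIN_NANO_AI_TERA_OPS = 40.0    # INT8
NANO_AI_TERA_OPS = 0.472        # FP16
ORIN_NANO_CUDA_TFLOPS = 1.28
NANO_CUDA_TFLOPS = 0.236


def speedup(new: float, old: float) -> float:
    """Return how many times larger the new figure is than the old one."""
    return new / old


ai_speedup = speedup(ORIN_NANO_AI_TERA_OPS, NANO_AI_TERA_OPS)
cuda_speedup = speedup(ORIN_NANO_CUDA_TFLOPS, NANO_CUDA_TFLOPS)

print(f"AI speedup:   {ai_speedup:.1f}x")    # about 84.7x
print(f"CUDA speedup: {cuda_speedup:.1f}x")  # about 5.4x
```

The raw AI ratio comes out slightly above the rounded 80x claim, and the CUDA ratio matches the quoted 5.4x.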

We’ll more thoroughly compare the two soon, but let’s focus on the Orin Nano’s AI performance.

It’s also important to note that all of this fits within a 15 W power envelope, making the new Orin Nano extremely power efficient overall.

With all the claimed performance improvements out of the way, let’s begin the benchmarking! We decided to install a 256 GB Kingston NV1-series SSD on the bottom of the Jetson Orin Nano and to move the filesystem from the SD card to it to avoid any would-be performance degradation caused by the card’s lower read speeds.

We first ran a set of AI benchmark models, which all returned impressive results. We also ran these on the original Jetson Nano and got the following scores:

There is a tremendous increase in performance here, really demonstrating how much more powerful the new system is.

Now, we were provided with some general expected benchmark numbers, and we’re happy to say that the system managed to exceed all of them, except in Action Recognition 2D. Somehow, every single time we ran this test, our Jetson Orin Nano reported a “system throttled due to over-current.” This message shows up when the system reaches its maximum power draw – but that makes sense, given that these benchmarks were designed to utilise every last drop of performance that the module can deliver.

The Orin Nano can also run Transformer AI models (these are the basis of generative AI; think ChatGPT or DALL-E), so we tested running NVIDIA’s PeopleNet Transformer model, a model for detecting, identifying, and tracking people in visual feeds. This model is quite hefty, and a ton of computing power is required to even run it, let alone get a usable frame rate. The Orin Nano nonetheless quickly and accurately identified subjects in the sample input video. On our Orin Nano, it ran at around 7.8 FPS, which is just as expected given the system’s theoretical performance. More powerful systems, like the AGX Orin, can get up to 30 FPS here, which approaches real-time for some videos, but that kind of performance is not always needed.

Jetson Orin Nano General Performance

While AI is where it truly shines, the Jetson Orin Nano is no slouch in general performance tasks, either. The six powerful A78AE cores really pull their weight here. Running at 1.5 GHz, they are lower-clocked than most other high-performance SBC CPU cores. However, the newer core technology used (A78AE is ARM’s latest and greatest industrial high-performance core) does help close—and even fully overcome—this gap, allowing the Orin Nano to outperform higher-clocked chips in certain tests.

As usual, we test performance using our standard set of benchmarks which we feel cover all major aspects of a chip’s performance.

Keep in mind that no benchmark is truly platform-agnostic or immune to third-party software influences. Many things can go wrong and skew results. This is why we suggest using synthetic benchmarks as general performance indicators and not absolute values.

Starting with Geekbench 5.4.0, we get a good single-core score of 538 points and an outstanding multi-core score of 2866, the best multi-core result we’ve ever seen in this test. Geekbench is a widely referenced benchmark that utilises modern hardware to its full potential.
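One way to read these two Geekbench numbers together is to ask how well the score scales across the six cores. The sketch below is our own back-of-the-envelope calculation, not something Geekbench reports, and it assumes ideal scaling would simply be the single-core score multiplied by the core count.

```python
# Scores and core count quoted in this review; names are illustrative.
SINGLE_CORE_SCORE = 538
MULTI_CORE_SCORE = 2866
CPU_CORES = 6


def multicore_scaling(single: float, multi: float, cores: int) -> tuple:
    """Return (speedup over one core, fraction of ideal linear scaling)."""
    speedup = multi / single
    efficiency = speedup / cores
    return speedup, efficiency


speedup, efficiency = multicore_scaling(SINGLE_CORE_SCORE, MULTI_CORE_SCORE, CPU_CORES)
print(f"Multi-core speedup: {speedup:.2f}x")    # about 5.33x
print(f"Scaling efficiency: {efficiency:.0%}")  # about 89%
```

Getting close to 90% of ideal scaling suggests the multi-core result is not being held back much by thermals or memory in this test.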

Continuing onto Sysbench CPU, a benchmark more reliant on clock speed, we see the lower-clocked A78AE cores struggling to keep up with the RK3588’s older but higher-clocked ones, producing a result which is just a tad behind.

ARM’s cryptography accelerator is somewhat non-standard in the way it shares its clock with the CPU core. Curiously, its design has remained consistent all the way back from the A72 cores, meaning that a higher-clocked A72 core would outperform a lower-clocked A78AE (or a regular A78 core).

When looking at cryptographic performance, we can see clear signs of a cryptographic accelerator being present on-board, but we can also see the effects of the 1.5 GHz clock speed. The Jetson Orin Nano trades punches with RK3399-based boards. Again, this isn’t a sign of weakness – any high-end ARM core clocked at around 1.5 GHz will perform roughly the same as the Orin Nano.

RAM performance in general is very good. Tested with tinymembench, we got rather respectable results. This benchmark tests RAM speeds as read from the ARM core, so processor speed does play a role in results, too.

LPDDR5 is a rare sight on SBCs, but definitely a welcome one. While it’s not being pushed to its theoretical limits here, this type of RAM allows for higher-bandwidth data transfers, especially when accessed by the GPU.

Unixbench also demonstrated some great results. While Unixbench also heavily relies on CPU clock speed due to its reliance on rather simple repeated operations, the Jetson Orin Nano managed to sneak in a few chart-topping scores in certain tests.

In our legacy and graphics tests, we got a bunch of chart-topping scores, including a new best Octane 2.0 score. All of these benchmarks are heavily software-reliant, with Octane and Bmark relying on browser GPU support (which usually is not great) and glmark2 and glxgears relying on a complicated library and driver backend. For running these tests we used OpenCV 4.5.0 with CUDA, which we compiled on the system. The fact that we managed to compile OpenCV locally is another testament to the raw power of the Orin: it’s a difficult process which is very taxing on the system, and the board handled it like a champ.

There’s a reason we retired these tests from our main benchmark suite – and their results should be treated as purely anecdotal. Still, somehow, their results curiously relate to real-world OS performance (perhaps precisely due to their reliance on the system setup).

Finally, we tested SSD read speed using hdparm, which returned another chart-topper at 1422.11 MB/s. Impressive stuff! The Jetson features full-speed M.2 slots, which isn’t the case with a lot of SBCs on the market nowadays.

While the Jetson Orin Nano performs great and delivers an incredible amount of performance, there is one notable omission: there are no NVENC hardware video encoders on-board! This seems like a strange oversight since the previous Jetson Nano had them. When we asked NVIDIA about it, they commented: “We saw many usecases were not using the encoder, so we made some architectural tradeoffs for the Orin Nano to bring the Orin architecture and more compute to Entry Level Applications. We do provide SW encoding via the CPU cores. With the CPU cores you can support up to 3 streams of 1080P30 using SW Encoding”.

This is a fair point – especially if it meaningfully lowered production costs. Performance seems to be only a tiny bit worse than what the original Nano can offer. With the extra CPU headroom, we feel like most projects won’t miss out on too much. Still, it would have been nice to have the tech included for those few projects that do utilise it.

Finally, it’s clear that most of the raw performance losses stem from a lower base CPU clock. If the A78AE cores were clocked at around 2.4 GHz, we’re positive there wouldn’t be a single test in which any other SBC we’ve tested so far would have won. While part of the reason for the lower CPU clock probably stems from market segmentation, we truly believe that the final decision was guided by power usage. The entire system uses just 15 W (which can be configured down to 7 W), and efficiency is essential in edge systems. Processors also generally offer better performance per watt at lower clock speeds, which further helps achieve undoubtedly great performance while retaining low power usage.

Software Support

NVIDIA’s done an amazing job with software support, bringing the full AI software stack to the Jetson. The same software that powers all NVIDIA-accelerated computing hardware is here: Jetson seamlessly integrates with Omniverse Replicator, TAO, Isaac Sim, and all of their other SDKs and software in the field.

The idea here is simple: while you can use the Jetson Orin Nano as a standalone platform, it shines when used in conjunction with a more powerful machine running NVIDIA’s full set of tools for creating, training, and managing models, and then deploying the model and running the inference on the Jetson.

NVIDIA provided us with a demo TAO workflow that uses Isaac Sim to generate artificial training data. We were also provided with a cloud instance to run this workflow on, as it requires some serious computing power.

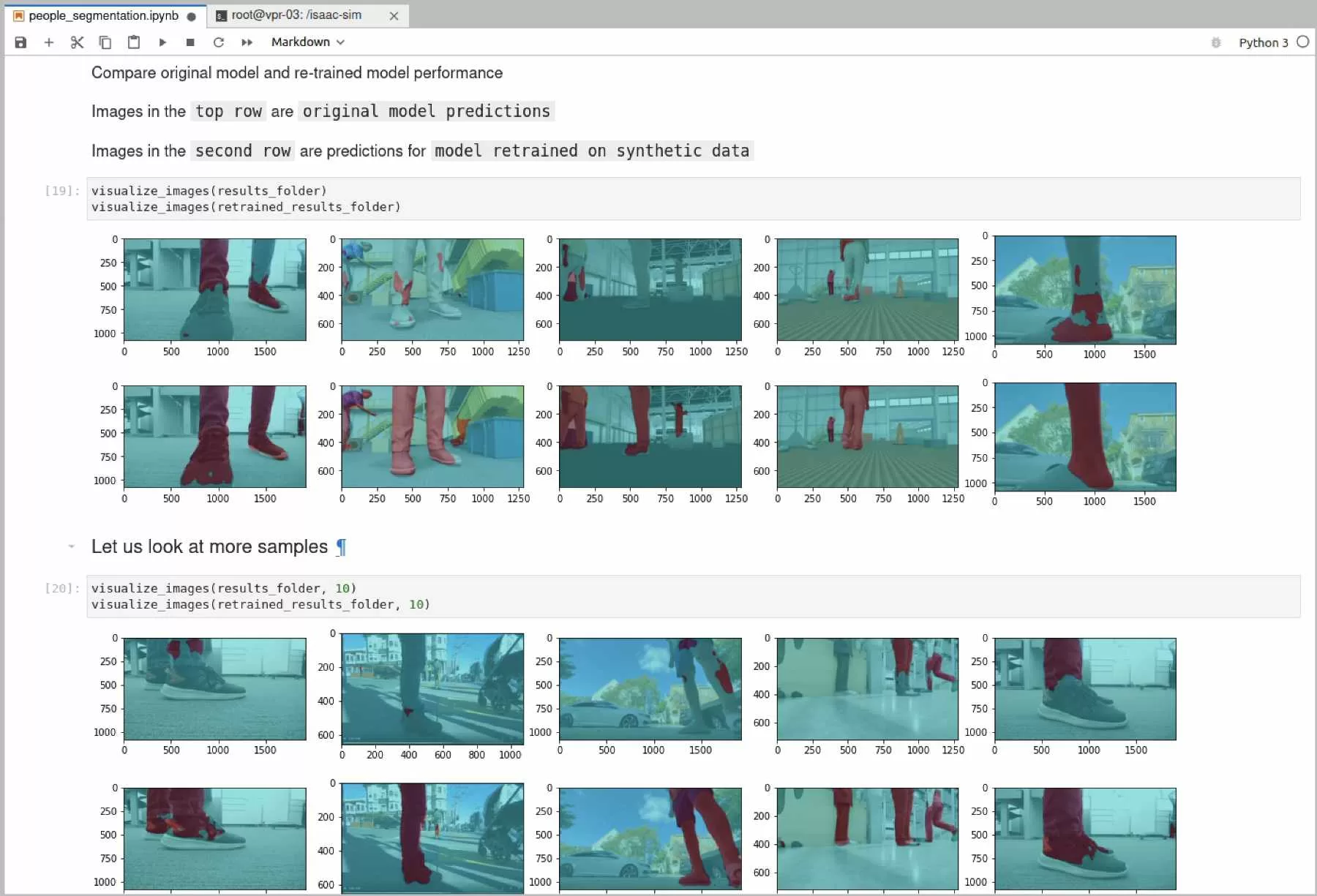

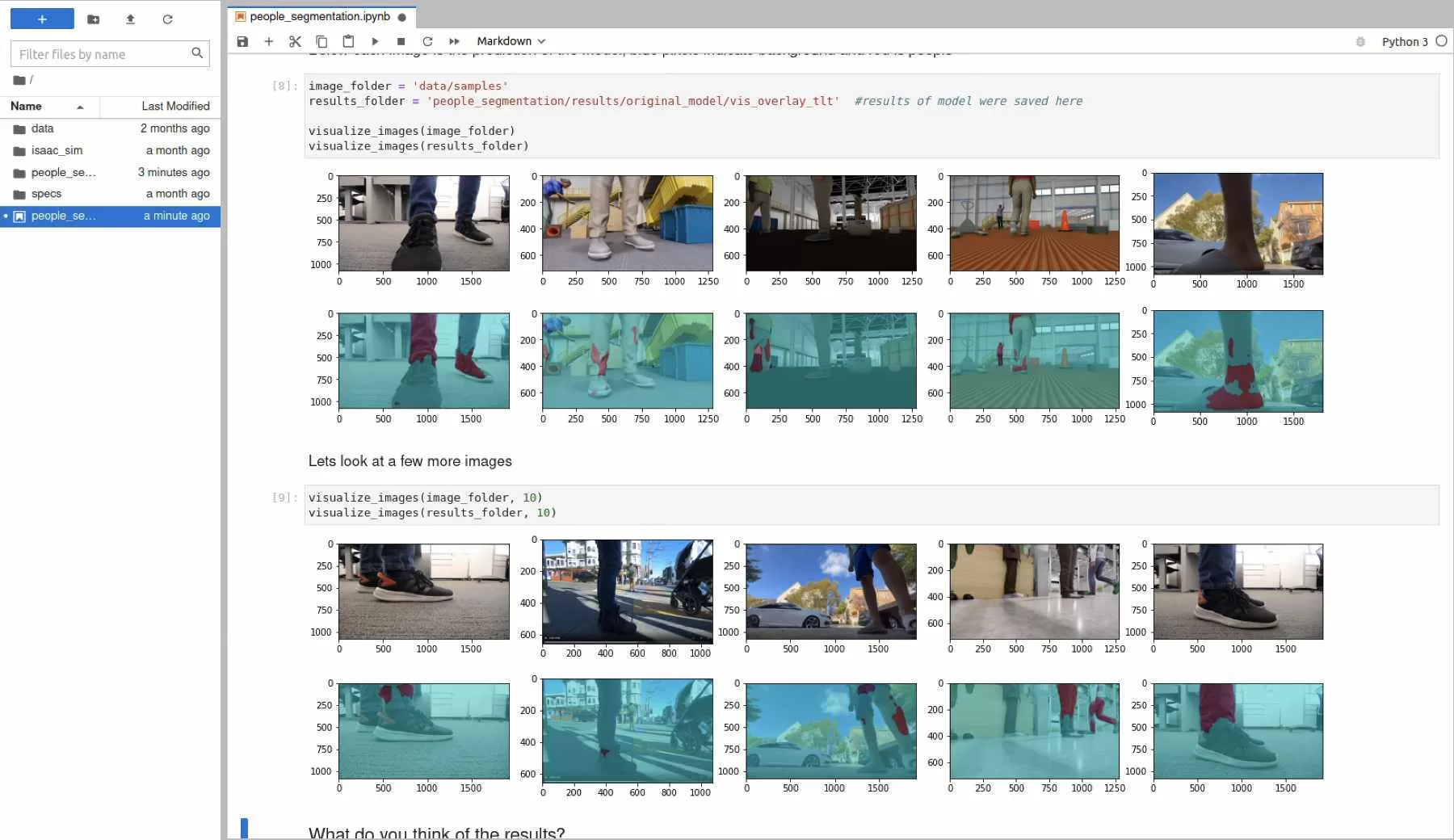

Honestly, we were quite impressed with the ease of use of the workflow. Back at GTC 2023, NVIDIA unveiled a new TAO version that further simplified every part of model training. As we went through a Jupyter Notebook, we quickly managed to pull the pre-trained model, run it, and notice the issues with the original data set (it didn’t cover all the needed camera angles).

Thus, we ran Isaac Sim to generate synthetic training data and train the model further for more accurate people detection. After all, the model we’re working with is supposed to help autonomous robots avoid collisions with people’s legs, so accuracy here is crucial!

After generating the data and training the model with it (and being impressed by how simple it is to do this: TAO basically runs with next to no code required, and the commands themselves are pretty simple), we had a way more accurate model that detected legs really well (hooray!).

Upon validating the performance, we exported the trained-up model and put it on the Jetson Orin Nano to run it. And it ran great! We had real-time performance at 30 FPS running the model with rare frame drops. It was impressive—the Jetson truly proved how well integrated it is with NVIDIA’s extensive AI toolkit.

For those wanting a fully self-contained experience, the Jetson Orin Nano is a fully-featured AI workstation, too. TensorRT, PyTorch, TensorFlow, and many more projects can be created right on the device, with hardware acceleration enabled by excellent SDKs available on-board.

The same software is also used across all Orin modules, meaning that code and projects are portable, further speeding up prototyping.

Jetson Orin Nano vs. Jetson Nano

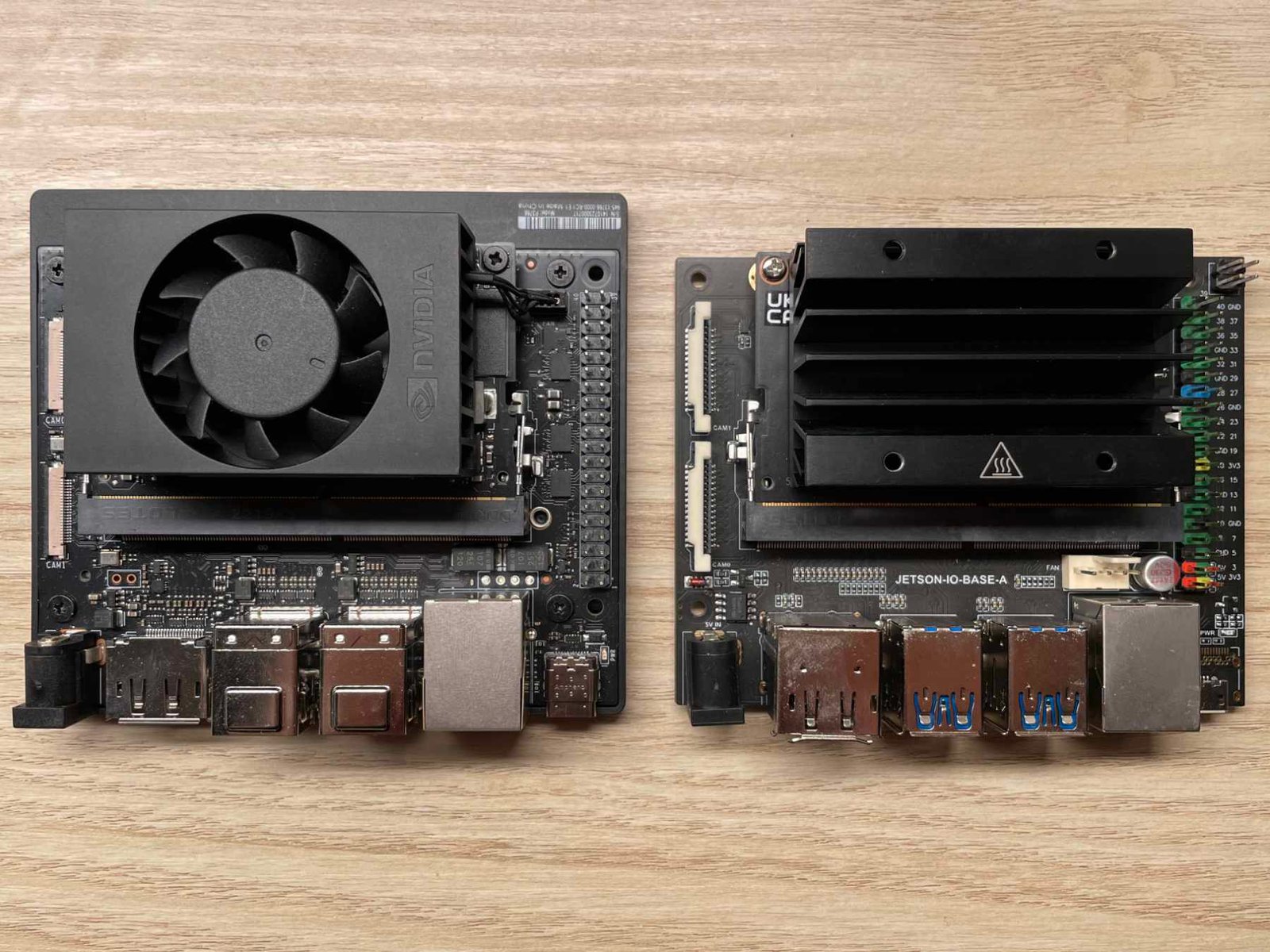

The two Nanos are alike only in name, really. As stated before, these two should not be directly compared as they serve vastly different markets. The Jetson Nano remains NVIDIA’s $149 entry-level AI platform for education and makers, while the Jetson Orin Nano sits in a class above, being closer in performance to older but higher-end Jetson TX2 and Xavier NX-based systems, intended for more serious projects and industrial deployment.

The two systems side by side; new Orin Nano’s on the left

The original Jetson Nano features a Tegra X1 SoC with 4 Cortex A57 cores clocked at 1.47 GHz, 128 Maxwell GPU cores, and 4 GB of LPDDR4 RAM. This makes it considerably less powerful than its newer sibling. Just looking at the specs side by side shows how far edge technology has progressed in the past few years.

Performance differences are also night-and-day. We ran NVIDIA’s AI benchmarking suite on the older Jetson Nano too, as well as some tests from our regular selections of benchmarks, and we found stark differences across the board.

Starting with Geekbench 5, we can see almost a five-times increase in performance.

Sysbench CPU reports slightly less dramatic results – the Orin Nano gets “only” about twice the score (again, since the differences between the old Nano’s A57 cores and the newer A78AE cores show way more in higher-level tests).

Being rather old cores (the A57 is the predecessor of the A72 cores), the A57’s crypto accelerator is much slower, leading to noticeably worse OpenSSL performance.

Having slower and significantly lower bandwidth memory also impedes the original Nano’s performance somewhat; tinymembench results illustrate the performance differences rather well.

Finally, we ran glmark2, which gave us some interesting results. Again, a lot is down to software here, but some performance differences generally exist. GLMark2 notoriously “steals” points at higher scores, so more of a difference is noticeable when running the test in 4K and 1080p resolutions rather than in the native 800 x 600 resolution. These three scores aren’t a direct indication of the best possible graphical performance obtainable from either of the systems, but the Orin Nano nevertheless managed to almost double the score of its predecessor at its best.

However, the original Jetson Nano still has a few tricks up its sleeve. It’s significantly less expensive, for starters, making it much more accessible to makers just trying out the AI waters. It’s also lighter on power consumption, using just 7 W which can be configured down to just 3 W. For edge projects with a focus on power source longevity, this might be a deciding factor – even though the new Orin Nano offers much better performance-per-watt.
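To put a rough number on that performance-per-watt gap, the sketch below divides each board’s quoted peak AI throughput by its quoted maximum power draw. It only uses figures from this review (40 TOPS INT8 at 15 W versus 0.472 at FP16 and 7 W), the names are ours, and because the precisions differ the result is an illustration, not a measurement.

```python
def perf_per_watt(tera_ops: float, watts: float) -> float:
    """Return peak tera-operations per second per watt."""
    return tera_ops / watts


# Peak throughput and maximum power draw as quoted in this review.
orin_nano = perf_per_watt(40.0, 15.0)  # INT8 figure
old_nano = perf_per_watt(0.472, 7.0)   # FP16 figure

print(f"Orin Nano: {orin_nano:.2f} per watt")      # about 2.67
print(f"Nano:      {old_nano:.3f} per watt")       # about 0.067
print(f"Ratio:     {orin_nano / old_nano:.1f}x")   # about 39.5x
```

Even allowing for the INT8 versus FP16 mismatch, the newer board does far more work for each watt it draws, so the older Nano’s advantage is in absolute power draw only.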

Finally, the inclusion of the NVENC encoder capable of encoding one 4K30, two 1080p60, four 1080p30, and so on, up to nine 720p30 streams, is rather useful (again, the newer model manages up to three 1080p30 streams using software encoding). This does mean that the older Jetson Nano could outperform the newer model in some video pipelines.
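Those NVENC stream counts look arbitrary at first, but they all describe the same pixel budget. The sketch below checks this, assuming that 4K means 3840 x 2160 and that raw pixels per second is a fair proxy for encoder load (a simplification on our part); the names are ours.

```python
# Assumed frame sizes; "4K" is taken to mean UHD (3840 x 2160).
RESOLUTIONS = {"4K": (3840, 2160), "1080p": (1920, 1080), "720p": (1280, 720)}


def pixel_rate(resolution: str, fps: int, streams: int) -> int:
    """Return total pixels per second across all streams."""
    width, height = RESOLUTIONS[resolution]
    return width * height * fps * streams


# Hardware (NVENC) modes of the original Jetson Nano, as listed above.
nvenc_modes = [("4K", 30, 1), ("1080p", 60, 2), ("1080p", 30, 4), ("720p", 30, 9)]
nvenc_rates = [pixel_rate(*mode) for mode in nvenc_modes]

# Software encoding on the Orin Nano: three 1080p30 streams.
software_rate = pixel_rate("1080p", 30, 3)

print(nvenc_rates)                     # every mode is 248832000 pixels/s
print(software_rate / nvenc_rates[0])  # 0.75
```

By this measure, the Orin Nano’s software encoding covers three quarters of the old Nano’s hardware encoding budget, at the cost of CPU time.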

Conclusion

If it wasn’t clear already, we really love the new Jetson Orin Nano Developer Kit. It isn’t just a powerful AI computer capable of mighty feats; it’s also one of the fastest SBCs we’ve tested and the graphically most powerful one. Not only that, it’s got even more powerful software.

Great software and great hardware really do work in tandem on this one. NVIDIA provides users with amazing tools and amazing support, allowing for AI development to truly be fast and easy.

We had to knock a few points off for lack of some hardware, but it doesn’t detract from the sense of awe at the level of performance obtainable from such a tiny little device.

A bit steeper in price than most other SBCs (retailing for $499), this developer kit also offers more than most. For those just getting started with AI, we’re inclined to suggest first picking up the $149 Jetson Nano, but for those already well-versed in the field and ready to tackle more demanding projects, getting the new Jetson Orin Nano should be a must.
